SIGGRAPH Asia 2024

Authors

Sunwoo Kim, Minwook Chang, Yoonhee Kim, Jehee Lee


Abstract

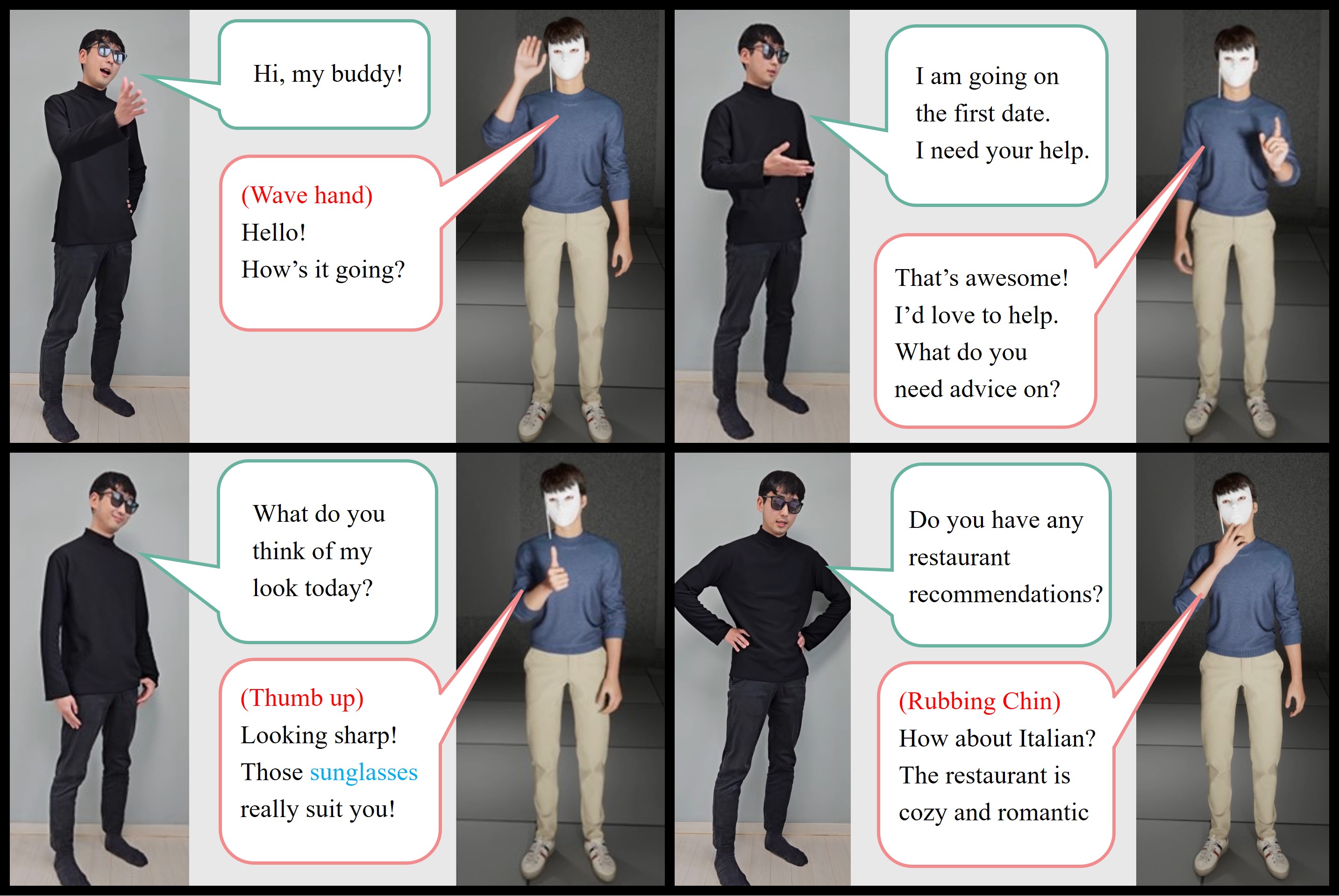

Creating intelligent virtual agents with realistic conversational abilities requires multimodal communication that extends beyond text. Body gestures, in particular, play a pivotal role in delivering a lifelike user experience by conveying additional context, such as agreement, confusion, and emotional state. This paper integrates a motion matching framework with a learning-based approach to generate gestures for multimodal, real-time, interactive conversational agents that mimic natural human discourse. Our gesture generation framework synchronizes accurately with both the rhythm and the semantics of spoken language, together with multimodal perceptual cues. It also incorporates gesture phasing theory from social science research to preserve critical gesture features while responding agilely to unexpected interruptions and barge-in situations. Our system achieves greater responsiveness, fluidity, and quality than traditional turn-based gesture generation methods.
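For readers unfamiliar with motion matching: it is a data-driven animation technique that, at runtime, searches a database of captured motion for the frame whose feature vector best matches a query, then resumes playback from that frame. The sketch below is a minimal, generic version under stated assumptions, not the authors' implementation: it assumes per-dimension z-score normalization, a weighted squared-Euclidean cost, and a simple bias that favors continuing the current clip. The helper names (`build_database`, `search`), the feature layout, and the weights are all hypothetical. The system described above additionally couples the search with a learning-based component and gesture-phase constraints, which this sketch omits.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class MotionDatabase:
    # Hypothetical layout: one normalized feature vector per animation frame.
    features: List[List[float]]
    mean: List[float]
    std: List[float]


def build_database(raw_features: List[List[float]]) -> MotionDatabase:
    """Z-score normalize each feature dimension so no dimension dominates the cost."""
    n = len(raw_features)
    dims = len(raw_features[0])
    mean = [sum(f[d] for f in raw_features) / n for d in range(dims)]
    std = []
    for d in range(dims):
        var = sum((f[d] - mean[d]) ** 2 for f in raw_features) / n
        # Guard against constant dimensions (zero variance) by falling back to 1.0.
        std.append(math.sqrt(var) or 1.0)
    normalized = [[(f[d] - mean[d]) / std[d] for d in range(dims)] for f in raw_features]
    return MotionDatabase(normalized, mean, std)


def search(
    db: MotionDatabase,
    query: List[float],
    weights: List[float],
    current_frame: Optional[int] = None,
    continuation_bias: float = 0.0,
) -> Tuple[int, float]:
    """Return (best_frame, cost) via a brute-force weighted nearest-neighbor search.

    If current_frame is given, the frame that would naturally follow it has its
    cost reduced by continuation_bias (an assumed heuristic to avoid jittery jumps).
    """
    q = [(x - m) / s for x, m, s in zip(query, db.mean, db.std)]
    best_frame, best_cost = -1, math.inf
    for i, f in enumerate(db.features):
        cost = sum(w * (a - b) ** 2 for w, a, b in zip(weights, f, q))
        if current_frame is not None and i == current_frame + 1:
            cost -= continuation_bias
        if cost < best_cost:  # strict '<' keeps the earliest frame on ties
            best_frame, best_cost = i, cost
    return best_frame, best_cost
```

For example, with a toy four-frame database of two hypothetical features, `search(build_database([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]), [0.9, 0.1], [1.0, 1.0])` selects frame 1. Production systems typically replace the linear scan with an acceleration structure and run the search only every few frames; the brute-force loop here is kept for clarity.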